Return of the Right to Fail

Alexandros M. Goudas (Working Paper No. 3) January 2017

President Obama can be credited with starting, or at least helping to popularize, the push to increase US college completion. In 2009, Obama initiated the “completion agenda” (Field, 2015), with the target of making the US a leader in postsecondary graduation rates by 2020. Unfortunately, college graduation rates have not budged much since, and most of the increases that did occur came before Obama set this goal (Field, 2015, para. 19–20). In fact, students who enrolled in 2009 had lower completion rates because of the recession (Shapiro et al., 2016). Four-year institutions’ graduation rates are back up to 54.8%, but that is still below the prerecession rate of 56.1% (see the National Student Clearinghouse Research Center’s [NSCRC] annual graduation report here).

Nevertheless, it would be difficult to argue that increasing college completion is a bad goal. In fact, thousands of colleges, universities, and organizations have signed on to do their part, including the Bill and Melinda Gates Foundation, Complete College America (CCA), and the Community College Research Center (CCRC), among many others. Even reputable economists who argue that there is no gap between workers’ skills and available jobs would still agree that having more citizens with postsecondary degrees is a positive goal for the US.

The problem, however, lies not with the end but, as with most endeavors, with the means. As with many reforms in society today, reformers seem interested only in short-term gains. In their attempt to increase graduation rates quickly, some practitioners, policymakers, and legislators have been pushing to reduce and excessively reform remediation because they view remedial courses as a barrier.

Because reformers view remediation as an obstacle, they propose such solutions as acceleration and corequisites, some models of which place even the most unprepared students into college-level courses, with no evidence of an increase in completion rates. Other solutions include the CCRC recommendation to lower the college-level placement bar until almost 75% of students automatically place into college-level courses (Scott-Clayton & Stacey, 2015).

As outlined in a recent Inside Higher Ed article (Smith, 2016), the State of North Carolina adopted these placement recommendations and set the bar for college-level courses at a high school GPA (HSGPA) of 2.6, well below the average national HSGPA of 3.0 (Nord et al., 2011, p. 14). As cited in Smith (2016), Elisabeth Barnett, a senior researcher at the CCRC, appeared to promote the idea that simply avoiding remediation is the goal when she claimed that “‘the advantage of having other measures is you have various ways to get placed out of developmental education’” (para. 3). Anecdotally, educators in North Carolina report that remedial course enrollments have declined by two-thirds and that many at-risk students are not doing well in college-level courses, especially those with an HSGPA between 2.6 and 3.0. There is discussion of modifying this reform to reset the bar at 3.0.

On a side note, while Barnett (Smith, 2016) argued that a “‘single test isn’t going to be predictive [of student success]’” (para. 3), she failed to note that placing students by any one of “various ways” should be no more predictive. A student who is placed by whichever of several measures they happen to clear is still being placed by a single measure, so this practice should in fact be called multiple single measures. Multiple measures, as originally recommended by the CCRC (Scott-Clayton, 2012), should statistically combine two or more measures into a single, more accurate placement measure. In effect, then, the recommendation to use multiple single measures seems designed only to lower the placement bar and increase enrollment in college-level courses. In addition to North Carolina, Ivy Tech, the community college system for the entire state of Indiana, also places students with an HSGPA of 2.6 and above into college-level courses, and it is a prime example of the increasingly common use of multiple single measures for placement.
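The difference between the two approaches can be sketched in a few lines of Python. This is a minimal illustration, not any college's actual placement rule: the 2.6 HSGPA bar is North Carolina's as cited above and 3.0 is the national average HSGPA from Nord et al. (2011), but the test cutoff, the score spreads, the equal weights, and the composite bar are all hypothetical assumptions. In practice the weights of a combined measure would typically be estimated from past student outcomes rather than assumed.

```python
from dataclasses import dataclass

# Hypothetical cutoffs for illustration only.
GPA_CUTOFF = 2.6    # North Carolina's college-level HSGPA bar, as cited in the text
TEST_CUTOFF = 80.0  # assumed placement-test cutoff (not from the source)


@dataclass
class Student:
    hsgpa: float       # high school GPA
    test_score: float  # placement test score on an assumed scale


def multiple_single_measures(s: Student) -> bool:
    """Place into college level if ANY one measure clears its own cutoff.

    Every extra measure is another way in, so this rule can never place
    fewer students than either cutoff used alone.
    """
    return s.hsgpa >= GPA_CUTOFF or s.test_score >= TEST_CUTOFF


def combined_measure(s: Student, w_gpa: float = 0.5, w_test: float = 0.5,
                     bar: float = 0.0) -> bool:
    """Place into college level if ONE weighted composite clears ONE bar.

    Each measure is standardized first. The means (3.0 HSGPA, 80 test points),
    spreads (0.5 and 20), weights, and bar are all assumptions.
    """
    z_gpa = (s.hsgpa - 3.0) / 0.5
    z_test = (s.test_score - 80.0) / 20.0
    return w_gpa * z_gpa + w_test * z_test >= bar


students = [
    Student(2.7, 50.0),  # clears the GPA bar only
    Student(3.4, 90.0),  # strong on both measures
    Student(2.2, 85.0),  # clears the test bar only
    Student(2.4, 70.0),  # clears neither
]

placed_any = sum(multiple_single_measures(s) for s in students)
placed_combined = sum(combined_measure(s) for s in students)
print(placed_any, placed_combined)
```

With these made-up students, the "any one measure" rule places three of four into college-level courses, while the composite places one; how many the composite places depends entirely on where its single bar is set.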

Like Barnett, proponents of these reforms believe that once most remedial courses are eliminated or severely reduced and most students start in college-level courses instead (i.e., they think that “the default placement for the vast majority of students” should be college-level), more students will be able to graduate. What these decisions actually do, however, is put into practice the decades-old philosophy of the right to fail. This short-term approach may in fact be harming hundreds of thousands of at-risk students across the nation while not improving graduation rates, the purported goal of all recent nationwide reforms.

One of the best overviews of the debate over the right to fail can be found in an article by Hadden (2000), published in the Community College Journal of Research and Practice. Referencing Roueche and Roueche’s book (1993), which recommended mandatory placement into remediation as a best practice, Hadden cited research showing that most students in the past chose to bypass remediation to their detriment. Drawing on Roueche and Roueche (1993, 1994) and others, Hadden also suggested that the influx of new and more underprepared students into the community college system in the 1960s and 1970s led to a movement in the 1980s to enforce standards for entrance into gateway courses. Thus mandatory placement into and out of remediation became more common. (For additional information on how remedial courses came to be more commonplace, a good overview of the history of remediation can be found here.)

It is extremely important to understand that these original recommendations were created and enforced to protect at-risk students. Promoters of mandatory placement believed that the right to fail was harmful for incoming college students, especially at-risk students, and they used data to support their recommendations. For example, Hadden (2000) summarized several studies to argue that at-risk students perform better with mandatory remediation:

McMillan, Parke, and Lanning (1997) cite studies by Boylan (1983), Kulik, Kulik, and Schwalb (1983), and Roueche, et al. (1984) and assert that most studies find that ‘students who successfully complete recommended remedial/developmental courses perform as well as or better than college-prepared students in terms of grade point average, retention, and program completion’ (pp. 26–27). Roueche and Roueche (1993) also confirm that students are better served by mandatory assessment and placement (p. 252). (p. 830)

Hadden (2000) also noted the irony that no one balks when institutions enforce prerequisites for higher-level courses such as Chemistry, Biology II, or Calculus. Prerequisites are a form of mandatory placement into courses that must be taken in sequence before other courses. However, when remediation is discussed as a prerequisite, then

colleges hesitate to mandate placement for entry-level college courses—a place that may be the most crucial point for mandatory placement—despite research and reports that indicate the success rate of students who remediate is higher than those students who waive remedial placement and register for college-level courses (Mitchell, 1989; National Association for Developmental Education 1998; Weissman, Silk, & Bulakowski, 1997). (p. 828)

It is clear that many in higher education do not recognize that remedial courses are tiered, sequential courses which lead to gateway college-level courses.

In one of the greatest ironies and reversals in education today, however, the Southern Education Foundation (SEF), which has the laudable mission of supporting at-risk students, has pushed for reforms such as acceleration and corequisites in order to increase access to college-level courses for students of color and students in poverty. On the title page of “Not Just Faster: Equity & Learning Centered Developmental Education Strategies” and again later in the report, Jones and Assalone (2016) make this remarkable claim:

Reforming DE is one of higher education’s most critical equity imperatives across institutional type. Consequently, the current push in higher education to make college level, credit-bearing courses more accessible to all students, but especially students of color and low-income college students, is the single most significant action being taken to dismantle structural inequality in higher education [emphasis added]. (p. 7)

Remarkably, the SEF argued that putting more underprepared students into college-level courses will help reduce the gaps between minorities and whites and between the poor and the wealthy. This argument only works if these reforms have actually been shown to increase passrates and graduation rates, especially for students of color.

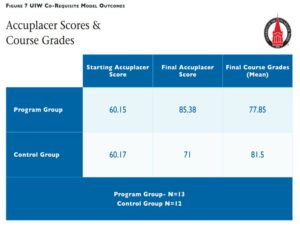

Unfortunately, recent research, including studies the SEF cited in its own paper (Jones & Assalone, 2016), has shown otherwise. First, in support of corequisites, the SEF showcased a study with an extremely low number of participants (n = 13 in the intervention group and n = 12 in the control group). Samples this small have well-documented problems, even before adding the problem of p-hacking. Even with those low numbers, the intervention showed no difference in Accuplacer scores or grades compared to the control group (with groups this small, there is no statistical difference between an Accuplacer score of 85 and one of 71), and no statistical analysis appears to have been conducted (Figure 7, p. 15).
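The missing analysis is not difficult to run. Below is a minimal sketch of Welch's two-sample t-test applied to the two reported averages (85 and 71) and group sizes (13 and 12). The spread of scores is not given here, so the standard deviations are hypothetical assumptions, as is the pairing of each mean with a group size; the point is that whether a 14-point gap is meaningful depends on that spread, which is exactly why the analysis should be reported.

```python
import math


def welch_t(mean_a: float, sd_a: float, n_a: int,
            mean_b: float, sd_b: float, n_b: int) -> tuple[float, float]:
    """Return Welch's t statistic and the Welch-Satterthwaite degrees of freedom."""
    va = sd_a ** 2 / n_a  # squared standard error of group A's mean
    vb = sd_b ** 2 / n_b  # squared standard error of group B's mean
    t = (mean_a - mean_b) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (n_a - 1) + vb ** 2 / (n_b - 1))
    return t, df


# Approximate two-sided 5% critical value of t for about 23 degrees of
# freedom, taken from a standard t table.
T_CRIT_DF23 = 2.07

# Reported means and group sizes; the standard deviations are hypothetical.
for assumed_sd in (20.0, 10.0):
    t, df = welch_t(85.0, assumed_sd, 13, 71.0, assumed_sd, 12)
    verdict = "significant" if abs(t) > T_CRIT_DF23 else "not significant"
    print(f"SD={assumed_sd:.0f}: t={t:.2f}, df={df:.1f}, {verdict} at 5%")
```

With an assumed spread of 20 points, t comes out near 1.75, short of the roughly 2.07 required. With a spread of only 10 points, the same gap would clear that threshold.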

It is quite surprising that this organization would use data of this quality to support the claim that this type of reform is part of “the single most significant action being taken to dismantle structural inequality in higher education” (p. 7).

CCRC research on corequisites shows similar problems. In a recent analysis of a statewide corequisite initiative in Tennessee, Belfield, Jenkins, and Lahr (2016) cited hypothetical numbers, representative of the actual data, that showed an overall increase in college-level passrates (20% to 60%) but also a 100% increase in college-level failrates (20% to 40%) (p. 4). As they stated, the absolute numbers are not real and are used only as an example, but they also noted that actual passrates are quite low for some students in the corequisite model: “[F]inally, while pass rates increased substantially for college-level math and writing under the corequisite model, many students who took corequisite courses did not pass—nearly half in math” (p. 10). This means that the college-level failrate among math students in the corequisite model is roughly 50%, whereas under the traditional model only 10% of students failed college-level math. That is a five-fold increase in failrates.
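Written out, the relative changes in this paragraph use only the figures quoted above: the hypothetical Tennessee passrates and failrates, and the math failrates under the two models.

$$
\begin{aligned}
\text{Hypothetical passrate change:}\quad & \frac{60\% - 20\%}{20\%} = 2 \;\Rightarrow\; \text{a 200\% increase (tripling)}\\[4pt]
\text{Hypothetical failrate change:}\quad & \frac{40\% - 20\%}{20\%} = 1 \;\Rightarrow\; \text{a 100\% increase (doubling)}\\[4pt]
\text{Math failrate ratio:}\quad & \frac{50\%}{10\%} = 5 \;\Rightarrow\; \text{a five-fold rate (a 400\% increase)}
\end{aligned}
$$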

Moreover, although the added cost of corequisites has received little attention, Belfield et al. (2016) noted that “corequisite remediation requires substantially more resources for the initial semester for each cohort of new students” (p. 3). The initial research on the corequisite model (Jenkins et al., 2010) also revealed that it cost nearly twice as much as the traditional model: “In examining the costs of the ALP approach, the college found that ALP costs almost twice as much per year as the traditional model, in which developmental courses are taught separately from the college-level courses” (p. 3). No study to date has found that corequisites have improved graduation rates. In fact, CUNY’s ASAP model, which combines traditional remediation with an array of other supports, is the only model that research has shown to increase completion metrics, and it roughly doubled graduation rates for remedial students (Scrivener et al., 2015).

For nearly double the cost, an increase in college-level passes, an increase in college-level failures, and no increase in graduation rates, corequisites hardly seem to be the panacea for at-risk student outcomes that the CCRC, the SEF, and others claim. Even the positive increase in actual passrates in the Tennessee corequisite study (Belfield et al., 2016), from 31% to 59% in writing and from 12% to 51% in math (p. 5), relied on a slight trick in data analysis. Therefore, when researchers claim that passrates in college-level courses double or triple, they are not quite comparing apples to apples.

The trick works as follows. Researchers track students who start in remediation over one or more semesters to see how many eventually pass the corresponding college-level gatekeeper course; this is the control group. In the intervention group, they put all students into that college-level course in the first semester, with some type of support, ranging from self-paced computer modules to an entire extra class taught by the same instructor (which of these is used makes a large difference). Then they compare the two groups’ passrates in the college-level course. That means they are comparing the passrates of students who have completed two or three semesters of college with those of students who have completed only one. Sometimes researchers even compare raw numbers of passing students rather than percentages.

When the groups are analyzed this way, almost any intervention would result in higher gateway passrates because of attrition alone in the control group. Data on college retention show that fewer than 50% of incoming students at two-year public colleges return for their second year (National Student Clearinghouse Research Center, 2015). Comparing these two groups’ gatekeeper passrates raises numerous other methodological problems, because in many cases corequisite remedial students have half the student-teacher ratio and double the time on task, two important interventions that traditional remedial students in the control groups did not receive (Jenkins et al., 2010). An extensive summary of the evidence on corequisites and the types of variations being implemented can be found here.
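A minimal sketch of why this comparison is lopsided, using expected values rather than real data. Every rate below is a hypothetical assumption chosen only to show the mechanism, not a figure from Tennessee or any other study: on the traditional path, some share of students drop out or fail to advance during each remedial semester before ever reaching the gateway course, while on the corequisite path everyone enrolls in the gateway course in the first semester.

```python
def gateway_outcomes(cohort: int, semesters_before_gateway: int,
                     progress_rate: float,
                     gateway_pass_rate: float) -> tuple[float, float, float]:
    """Expected gateway passes and fails, plus passes as a share of the whole cohort.

    progress_rate is the hypothetical share of students who both stay enrolled
    and advance after each semester spent before the gateway course.
    """
    reach_gateway = cohort * progress_rate ** semesters_before_gateway
    passes = reach_gateway * gateway_pass_rate
    fails = reach_gateway - passes
    return passes, fails, passes / cohort


# Traditional path: two remedial semesters, then the gateway course.
# Students who reach it pass at a higher assumed rate (70%).
trad = gateway_outcomes(1000, 2, 0.6, 0.70)

# Corequisite path: everyone takes the gateway course in semester one
# and passes at a lower assumed rate (45%).
coreq = gateway_outcomes(1000, 0, 0.6, 0.45)

for label, (passes, fails, cohort_rate) in (("traditional", trad), ("corequisite", coreq)):
    print(f"{label}: {passes:.0f} pass, {fails:.0f} fail, {cohort_rate:.1%} of cohort passed")
```

Under these assumed rates, the corequisite group's cohort-level passrate is nearly double the traditional group's (45% versus about 25%), even though students who actually reached the gateway course passed at a higher rate on the traditional path (70% versus 45%), and about five times as many students fail the gateway course.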

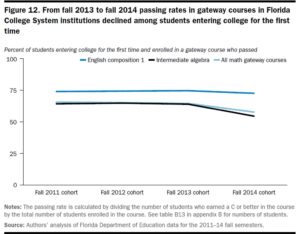

Other reforms that essentially promote the right to fail have popped up across the nation, most of which have equally disappointing results. For example, the State of Florida decided to make remediation optional. After such a decision, one would expect more students to enroll in college-level courses and a few more to pass overall (especially when calculated as the CCRC does above), yet many more to fail college-level courses than before. This is exactly what has happened. Newly published research demonstrates that the higher rate of enrollment in college-level courses causes a decline in the percent passing those courses (Hu et al., 2016, Figure 12). Essentially, more students are taking college-level courses and more are failing them. This is exactly what Roueche and Roueche (1993, 1994) were cautioning against.

What is interesting is that remedial passrates went up after the change. This makes sense. Many remedial students who opt to take college-level courses are probably not prepared for them simply because we as humans are not very good at assessing our own abilities. Fascinating and well-documented research supports this. In fact, it took two psychologists, Daniel Kahneman and Amos Tversky (Kahneman eventually won the Nobel Prize in Economics), to expose flaws in the economic tenet that we are all rational beings making well-informed decisions in a free-market economy. Their lessons about irrationality apply to education as well. Conversely, if a student does not have to take a remedial course and still takes it, that student is most likely a self-regulated learner, one of those students who will probably be successful due to noncognitive traits. Once remediation is optional, remedial classrooms hold a larger share of such students, which would help explain the rise in remedial passrates.
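The self-selection argument can be illustrated with a simple weighted average. This is a hypothetical sketch: the group sizes and passrates are invented for illustration, and "self-regulated" is treated as a simple yes/no trait. Each group passes the remedial course at the same rate before and after the change; only the mix of who enrolls differs.

```python
def pooled_pass_rate(groups: list[tuple[int, float]]) -> float:
    """Overall course passrate given (enrollment, passrate) pairs for each group."""
    total = sum(n for n, _ in groups)
    return sum(n * rate for n, rate in groups) / total


# Hypothetical enrollments: (self-regulated learners, passrate), (others, passrate).
mandatory = [(300, 0.85), (700, 0.50)]  # everyone below the bar must enroll
optional = [(250, 0.85), (150, 0.50)]   # mostly self-regulated learners opt in

print(f"mandatory: {pooled_pass_rate(mandatory):.1%}")
print(f"optional:  {pooled_pass_rate(optional):.1%}")
```

In this made-up example, the remedial passrate rises from roughly 60% to roughly 72% without any change in how well either group performs, purely because of who chooses to enroll.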

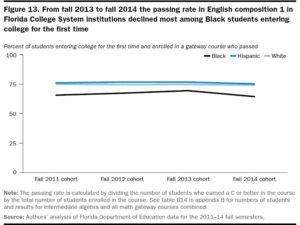

Most importantly, it turns out that allowing students to choose to bypass remediation harms Black students most. In several of the charts from the research on Florida, African Americans saw the largest declines in passrates (Hu et al., 2016, Figures 13, 14, and 15). Again, for decades experts in the field have recommended that students not have the right to fail because it harms them. This is more evidence that they are correct.

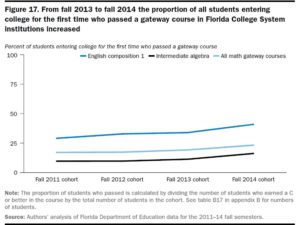

On a side note, the authors of this IES study (Hu et al., 2016) concluded that remediation is a barrier because the proportion of students passing gatekeeper courses went up slightly. This conclusion may confuse correlation with causation, first because the increase in college-level passrates (Figures 17, 18, and 19) may be due to the recession effect abating, and second because only one fall semester of data is being compared to three prior fall semesters. Too many other factors affect passrates in a single semester (the economy, for one; different populations entering college, for another) to attribute a very slight increase in overall college-level passrates to the State of Florida’s decision to make remediation optional. Even with the slight increase, incoming students’ passrates for college-level courses in Florida range from 15% in math to 30% in English. Perhaps the national attention Florida attracted for its remediation law influenced the results, or perhaps a type of regression to the mean is playing out.

Finally, as Figure 17 in Hu et al. (2016) shows, the increase in passrates for English Composition I from 2011 to 2012 is very similar to the one that occurred from 2013 to 2014. It is difficult to state with certainty that remediation is a barrier based on such a small increase.
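The comparison described here, checking whether the post-reform change stands out from ordinary year-to-year movement, can be sketched as below. The passrates are hypothetical placeholders, not values read from Hu et al. (2016); the years follow the text's framing (three falls before the change, 2011 to 2013, and one after, 2014). With so few data points this is a rough screen, not a statistical test.

```python
def year_over_year_changes(rates: list[float]) -> list[float]:
    """Differences between consecutive fall passrates."""
    return [later - earlier for earlier, later in zip(rates, rates[1:])]


def change_stands_out(pre_reform: list[float], post_reform: float) -> bool:
    """True if the post-reform change is larger than every pre-reform swing."""
    prior_swings = [abs(c) for c in year_over_year_changes(pre_reform)]
    return abs(post_reform - pre_reform[-1]) > max(prior_swings)


# Hypothetical fall passrates for 2011, 2012, and 2013 (pre-reform)
# and 2014 (the single post-reform fall).
pre = [0.26, 0.29, 0.27]
post = 0.295

print([round(c, 3) for c in year_over_year_changes(pre + [post])])
print(change_stands_out(pre, post))
```

With these placeholder values, the post-reform change (+2.5 points) is smaller than the 2011-to-2012 change (+3 points), so the function returns False: the "reform effect" is indistinguishable from an ordinary year.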

Clearly, what we do not see in the Florida data is a massive uptick in college-level passrates for at-risk students. At best we see mixed results. In fact, no long-term increase in graduation rates has resulted from any reform designed to place more students into college-level courses, and graduation rates are unlikely to rise dramatically in the near future. The most likely cause of increases in graduation rates over the past decade is the decrease in low-income students enrolling in college (Nellum & Hartle, 2016). What has resulted, however, is a slight increase in conditional college-level passrates at the cost of more college-level failures. Failing a college-level course carries especially serious consequences because it lowers students’ GPA and may affect their financial aid. Moreover, it makes that GPA even more difficult to raise afterward.

Should institutions sacrifice many underprepared students just to allow a few more students to pass college-level courses? Should they simply open up the college-level classroom to almost all students with little to no support, as the CCRC recommends and as North Carolina, Indiana, and others have done? Is this the best way to decrease the gaps between students of color and whites and between the poor and the wealthy? Contrary to the SEF’s claim, doing this appears to harm at-risk students most in the short term, and it does not improve graduation rates in the long term.

Why, then, does the idea of the right to fail persist? The answer most likely lies along a spectrum of views. Some people truly believe that putting more students into college-level courses, with or without some type of support, is a positive reform. Again, the data are mixed, but we do know that many more students are failing college-level courses under this approach. These individuals would most likely not say that the approach embodies the right to fail. I would argue, however, that institutions that simply put more students into college-level courses without providing thoughtful and more extensive support networks and resources for underprepared students are in fact endorsing the right to fail.

For others on the opposite side of the spectrum, particularly Complete College America and similar groups, eliminating remediation is their goal. Their inflammatory rhetoric and lack of data confirm this (Complete College America, 2012). Though they would not admit it publicly, they probably believe any reform that puts more students into college-level courses and spends less on remediation is a good reform. Thus they fully endorse the right to fail.

Until it is commonly accepted that many students do not receive the education they deserve in K-12, society will not serve incoming college students as they should be served. If we truly value poor students and minorities, we may wish to step back from the de facto “right to fail” movement and reconsider new reform policies that automatically place students into college-level courses. Instead of attempting to remove remediation completely, or accelerating it so much that students are essentially taking college-level courses without support, we need to address the various problems at-risk students face more holistically. We need to identify and eliminate the actual barriers remedial students face and not knowingly allow students to take courses for which they are unprepared.

References

Belfield, C. R., Jenkins, D., & Lahr, H. (2016). Is corequisite remediation cost effective? Early findings from Tennessee. Community College Research Center, Teachers College, Columbia University. https://ccrc.tc.columbia.edu/media/k2/attachments/corequisite-remediation-cost-effective-tennessee.pdf

Complete College America. (2012). Remediation: Higher education’s bridge to nowhere. Bill & Melinda Gates Foundation. https://eric.ed.gov/?id=ED536825

Field, K. (2015, January 20). 6 years in and 6 to go, only modest progress on Obama’s college-completion goal. The Chronicle of Higher Education. https://www.chronicle.com/article/6-Years-in6-to-Go-Only/151303

Hadden, C. (2000). The ironies of mandatory placement. Community College Journal of Research and Practice, 24, 823–838. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.580.4344&rep=rep1&type=pdf

Hu, S., Park, T. J., Woods, C. S., Tandberg, D. A., Richard, K., & Hankerson, D. (2016). Investigating developmental and college-level course enrollment and passing before and after Florida’s developmental education reform (REL 2017–203). U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance, Regional Educational Laboratory Southeast. https://ies.ed.gov/ncee/edlabs/regions/southeast/pdf/REL_2017203.pdf

Jenkins, D., Speroni, C., Belfield, C., Jaggars, S., & Edgecombe, N. (2010). A model for accelerating academic success of community college remedial English students: Is the accelerated learning program (ALP) effective and affordable? (CCRC Working Paper No. 21). Community College Research Center, Teachers College, Columbia University. http://ccrc.tc.columbia.edu/publications/accelerating-academic-success-remedial-english.html

Jones, T., & Assalone, A. (2016). Not just faster: Equity and learning centered developmental education strategies. Southern Education Foundation. https://files.eric.ed.gov/fulltext/ED585871.pdf

National Student Clearinghouse Research Center. (2015). Snapshot report: Persistence-retention (Snapshot Report 18). https://nscresearchcenter.org/wp-content/uploads/SnapshotReport18-PersistenceRetention.pdf

Nellum, C. J., & Hartle, T. W. (2016). Where have all the low-income students gone? American Council on Education. http://static.politico.com/49/73/624217574fc19fbe6d4d3a0100d0/aces-membership-magazine-article-on-low-income-students.pdf

Nord, C., Roey, S., Perkins, R., Lyons, M., Lemanski, N., Brown, J., & Schuknecht, J. (2011). The nation’s report card: America’s high school graduates (NCES 2011-462). U.S. Department of Education, National Center for Education Statistics. http://nces.ed.gov/nationsreportcard/pdf/studies/2011462.pdf

Roueche, J. E., & Roueche, S. D. (1993). Between a rock and a hard place: The at-risk student in the open-door college. American Association of Community Colleges.

Roueche, J. E., & Roueche, S. D. (1994). Climbing out between a rock and a hard place: Responding to the challenges of the at-risk student. Leadership Abstracts, 7(3). http://files.eric.ed.gov/fulltext/ED369445.pdf

Scott-Clayton, J. (2012). Do high-stakes placement exams predict college success? (CCRC Working Paper No. 41). Community College Research Center, Teachers College, Columbia University. http://ccrc.tc.columbia.edu/media/k2/attachments/high-stakes-predict-success.pdf

Scott-Clayton, J., & Stacey, G. W. (2015). Improving the accuracy of remedial placement. Community College Research Center, Teachers College, Columbia University. https://ccrc.tc.columbia.edu/media/k2/attachments/improving-accuracy-remedial-placement.pdf

Scrivener, S., Weiss, M. J., Ratledge, A., Rudd, T., Sommo, C., & Fresques, H. (2015). Doubling graduation rates: Three-year effects of CUNY’s Accelerated Study in Associate Programs (ASAP) for developmental education students. MDRC. http://www.mdrc.org/sites/default/files/doubling_graduation_rates_fr.pdf

Shapiro, D., Dundar, A., Wakhungu, P. K., Yuan, X., Nathan, A., & Hwang, Y. (2016). Completing college: A national view of student attainment rates – fall 2010 cohort (Signature Report No. 12). National Student Clearinghouse Research Center. https://nscresearchcenter.org/wp-content/uploads/SignatureReport12.pdf

Smith, A. A. (2016, May 26). Determining a student’s place. Inside Higher Ed. https://www.insidehighered.com/news/2016/05/26/growing-number-community-colleges-use-multiple-measures-place-students